FYI: You can get the Notebook for this article on my github and experiment yourself (opens with Azure Data Studio).

Preamble

Everybody knows that using loops in SQL Server is not efficient, if you’re able to write that same logic in a set-based statement it’s guaranteed to be faster.

Still, devs can’t be helped, you just can’t seem to nail down the set-equivalent statement for the loop, or, simply, you have to write a loop because the set-statement is too complicated for other members of the team to maintain and you have to bind your query powers for a greater good.

The Source of the issue (The DONE Token)

So, unavoidable loops, how do we mitigate the issue?

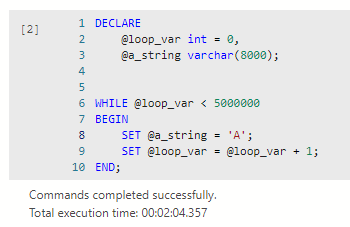

Let’s see what’s going on in this simple loop:

DECLARE

@loop_var int = 0,

@a_string varchar(8000);

WHILE @loop_var < 5000000

BEGIN

SET @a_string = 'A';

SET @loop_var = @loop_var + 1;

END;

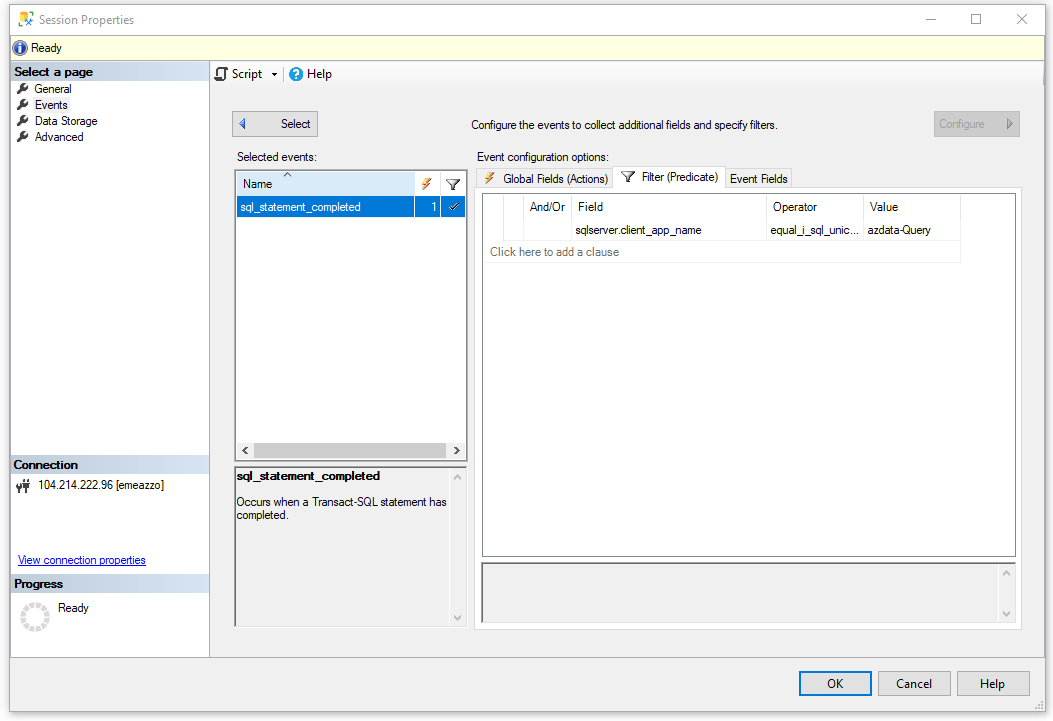

Not everybody knows that SQL Server sends a DONE Token to the client each time that a SQL statement completes (so, everything except variable declarations); For the query above you can basically track it with extended events by tracking the “SQL Statement Completed” event.

What happens in a loop? For each statement that’s completed, a token is sent, which means that for this loop that contains 3 statements (the WHILE loop itself it’s a statement) 15 Million tokens are sent to the client.

Let’s verify this:

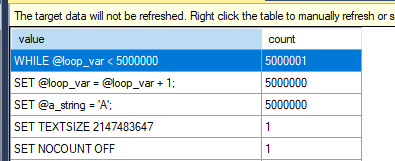

After running the query above, you’ll see the following, each statement has been executed 5 Million times (+1 for the loop constructor itself)

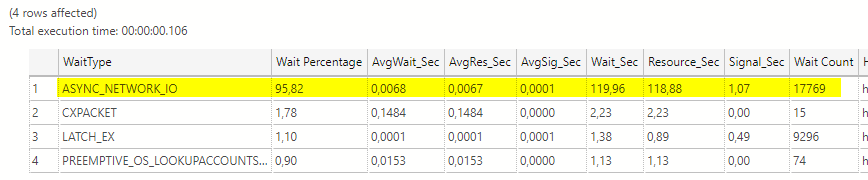

By tracking the wait stats, we can confirm that indeed the query completion is held back by ASYNC_NETWORK_IO, which means that the client/network is struggling to keep pace with the rate of which DONE Tokens are sent; Since we’re not returning any data in the batch/loop, the only thing handled by the client are the Tokens.

Network plays a big part in this, if you try to execute the sample query above with both the server and client in the same machine you probably won’t see as much waits and the query will complete much faster. This is why ofted devs think that everything it’s ok when they’re testing the code locally but everything burns when deployed. #propertesting

How to fix it

Paul White has suggested a couple of ways to get around this:

NOCOUNT is a well known set option to avoid sending useless messages to the client, saving time and bandwidth, the BOL page states:

SET NOCOUNT ON prevents the sending of DONE_IN_PROC messages to the client for each statement in a stored procedure. For stored procedures that contain several statements that do not return much actual data, or for procedures that contain Transact-SQL loops, setting SET NOCOUNT to ON can provide a significant performance boost, because network traffic is greatly reduced.

However, setting NOCOUNT ON at the beginning of the batch above makes no difference, only DONEINPROC Tokens (as the name implies, these tokens are the same as the DONE Tokens, but for statements inside a SP) are suppressed, hence all the DONE tokens that are sent by the batch still come to the client slowing everything down, so, let’s try with a procedure:

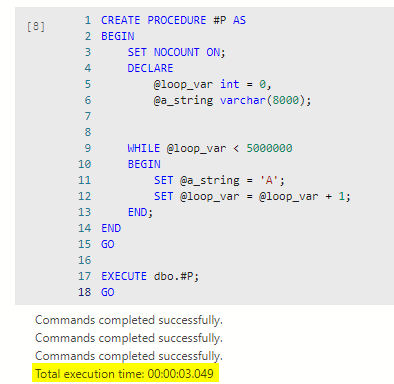

CREATE PROCEDURE #P AS

BEGIN

SET NOCOUNT ON;

DECLARE

@loop_var int = 0,

@a_string varchar(8000);

WHILE @loop_var < 5000000

BEGIN

SET @a_string = 'A';

SET @loop_var = @loop_var + 1;

END;

END

GO

EXECUTE dbo.#P;

GO

The procedure above is defined as a Temporary Procedure, which behaves as a Temp Table, it will be dropped when session referring to it is closed, but nothing impedes you from creating a standard stored procedure

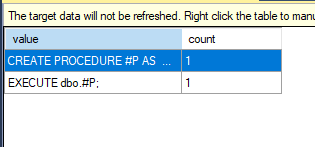

The “SQL Statement Completed” XEvent now shows only two statements:

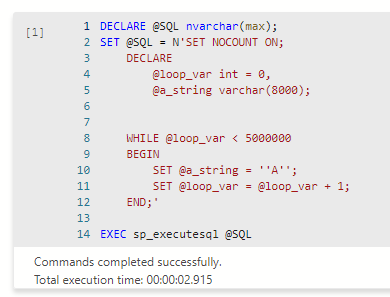

Another way you can get around this is with my favourite thing to play with, dynamic SQL:

Takeaway

It’s not ideal to have loops in SQL Server batches, however, if you have to, at least avoid overloading your client with “SQL Statement Completed”/”DONE” messages by using one of the techniques described above,

[…] Emanuele Meazzo shows one reason why loops can be so much slower in T-SQL: […]