I love extended events, and if you’re still using SQL profiler, go feel ashamed and come back only when you’ve stopped using it.

Now that you’re familiar with extended events, one of the common destinations for the tracked information are Extended Event Files, where simply put the events are saved to while they happen.

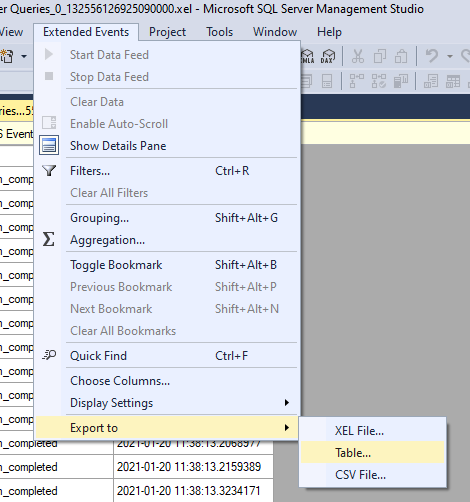

*.XEL files are easy to work with in SSMS, just double click on them and they’ll open up in Management Studio, and you have neat features too, like filtering, aggregations, ecc..

There’s even an export feature that allows you to export the contents to CSV/Table or another XEL file too (it makes sense, because you can export a subset of the original XEL events after filtering)

However, I’ve found myself in a tricky spot, as I had multiple instances recording events, and those events had to be analyzed as a whole.

I could have simply written a script to get the data from each instance, querying the system function sys.fn_xe_file_target_read_file and then uploading it somewhere else, but this approach has the issue of adding additional load on the source instances, and I didn’t want to add additional load to the already overworking instances I was trying to monitor.

I then wondered, can I move the files over from the busy servers and read them from another machine? I surely didn’t want to open each file manually and exporting it to a table and/or CSV in order to mash them together, too

Spoiler alert, if you read the title of this article, yeah, that why we’re here 🤷♂️

The code

Turns out that the SqlServer PowerShell module contains the cmdlet Read-SqlXEvent that has exactly this purpose

While is straightforward to display on-screen the information from a XEL file using that cmdlet, there are a couple of things to take care of when working with it programmatically, especially if the data needs to be flattened in order to be exported to a CSV file or a table (like in my case)

$DataXel = Read-SqlXEvent "\your\file.xel"

$XelTable = New-Object System.Data.DataTable

$XelTable.Columns.Add("Source","String") | Out-Null

$XelTable.Columns.Add("TimeStamp","DateTimeOffset") | Out-Null

$XelTable.Columns.Add("User","String") | Out-Null

$XelTable.Columns.Add("Database","String") | Out-Null

$XelTable.Columns.Add("physical_reads","int32") | Out-Null

$XelTable.Columns.Add("cpu_time","int32") | Out-Null

$XelTable.Columns.Add("logical_reads","int32") | Out-Null

$XelTable.Columns.Add("row_count","int32") | Out-Null

$XelTable.Columns.Add("duration","int32") | Out-Null

$XelTable.Columns.Add("writes","int32") | Out-Null

$XelTable.Columns.Add("batch_text","String") | Out-Null

foreach ($row in $DataXel) {

$arrayrow = $XelTable.NewRow()

$arrayrow["Source"] = $item.Name.Split('_')[0]

$arrayrow["TimeStamp"] = $row.TimeStamp

$arrayrow["User"] = $row.Actions["username"]

$arrayrow["Database"] = $row.Actions["database_name"]

$Fields = $row.Fields

foreach ($field in $Fields) {

try {

$arrayrow[$field.Key] = $field.Value

}

catch {

#Whatevs

}

}

$XelTable.rows.Add($arrayrow)

}

#Bulk Insert into SQL Server

, $XelTable | Write-SqlTableData -SchemaName "dbo" -ServerInstance "MySuperServer" -DatabaseName "DBabe" -TableName "XELsGOHere" -Force

Let’s examine what’s going on:

- I’m creating a DataTable and populating it with the content in the XEL file, since I then want to use it to upload the data into SQL Server, and that’s the nicest format to do so

- in the data table, I’m creating the columns that I’m actually interested in; there’s no need in fetching all the columns in the XEL nor anyone is forbidding you from computing some columns while loading, like I’m doing with “Source”

- Each single row is created in the foreach loop and added to the DataTable

- Timestamp it’s a bit of an outlier, as it’s stored directly at the row level of the XEL object, as shown

- As “fields” and “actions” are in different levels when you configure an Extended event, they are too in the XEL file

- Actions are stored in the Actions hash table in each $row of the XEL data

- Fields are stored in the Fields hash table in each $row of the XEL data

- In my demo code I’m showing both a manual mapping (for actions) and an automatic mapping (for fields), it just depends on how do you want to call the names of the DataTable

- The comma before the $XelTable object on the last row it’s not a typo, but it’s pretty important for performances; it tells powershell to pipe through the whole object to Write-SqlTableData (which btw it’s still part of the SQL Server module) which is then able to bulk load the data to a table, otherwise it would have passed through (and then uploaded) one excruciating row at the time

Ending Words

Read-SlqXEvent is just one of the neat gems that you can find in the SQLServer Powershell module, wich together with dbatools allows you script pretty much anything around SQL Server

This specific cmdlet allows you to read streaming data from the sessions themselves too, if you’re interested in something like that, check out what my fellow compatriot Gianluca Sartori has created as a .NET application here.

[…] Emanuele Meazzo shows how to work with Extended Event *.xel files: […]

Tried using powershell script and it works, except one of my Columns is coming is as NULL. when I opened the .xel file in ssms before running the script, the field has a value. The field is “action_name” and an example value is “DATABASE AUTHENTICATION SUCCEEDED”. Is there something I’m Doing wrong? Pasted it below.

$DataXel = Read-SqlXEvent “Downloads\test.xel”

$XelTable = New-Object System.Data.DataTable

$XelTable.Columns.Add(“client_ip”,”String”) | Out-Null

$XelTable.Columns.Add(“TimeStamp”,”DateTimeOffset”) | Out-Null

$XelTable.Columns.Add(“host_name”,”String”) | Out-Null

$XelTable.Columns.Add(“Action”,”String”) | Out-Null

$XelTable.Columns.Add(“server_principal_name”,”String”) | Out-Null

$XelTable.Columns.Add(“session_server_principal_name”,”String”) | Out-Null

$XelTable.Columns.Add(“database_principal_name”,”String”) | Out-Null

foreach ($row in $DataXel) {

$arrayrow = $XelTable.NewRow()

$arrayrow[“TimeStamp”] = $row.TimeStamp

$arrayrow[“Action”] = $row.Actions[“action_name”]

$Fields = $row.Fields

foreach ($field in $Fields) {

try {

$arrayrow[$field.Key] = $field.Value

}

catch {

#Whatevs

}

}

$XelTable.rows.Add($arrayrow)

}

#Bulk Insert into SQL Server

, $XelTable | Write-SqlTableData -SchemaName “dbo” -ServerInstance “pcname” -DatabaseName “DBAudit” -TableName “AuthenticationData” -Force

Ciao Glenn,

maybe it has something to do with data types, which version is your SQL Server instance?

Thanks Emanuele for sparing time to provide this insight

As I’m new to PowerShell, I have a few questions to clarify how to apply above code:

1. I see that the content of my XEL file is to be stored in the variable $DataXel, but I don’t see where the variable was used to retrieve the stored data

2. Do I have to create a corresponding table seperately in my SQL Server Database such as “XELGOHere”, or does the $XelTable variable automatically create the table in SQL Server database