Since about a couple of versions ago, Azure Data Studio introduced Jupyter Notebooks support for SQL Server (and with SQL Server 2019 “Big Data Clusters” on the horizon, it was about time)

What are Notebooks?

“Notebooks” are a tool that our data science/machine learning colleagues know, love and are at their disposal since some time (Python, Spark, etc…);

A notebook is an open-source format that includes both (executable) code and markdown in a single file, in order to have a continuous narrative (be aware that not all features are available in ADS as of now)

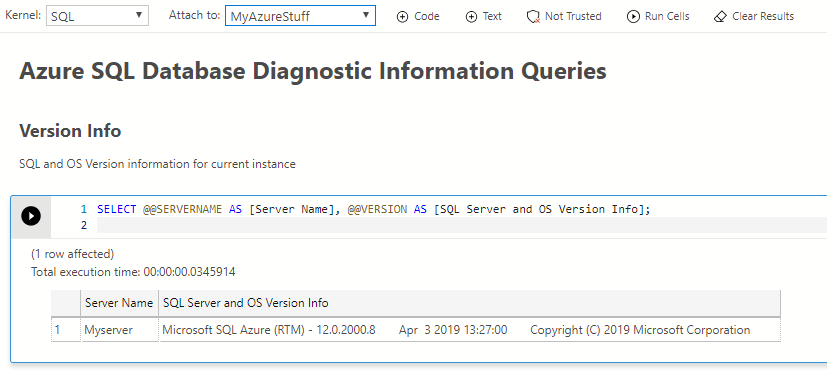

Now, Microsoft has implemented the SQL Kernel in ADS.

Moreover, SQL Notebooks aren’t limited to the latest version of SQL Server but you can connect to any supported SQL Server version.

I’ve personally used SQL Notebook in my day-to-day work for Data Analysis, as the possibility to tweak the code and run it in the notebook greatly enhances the presentation of the data as oppose to a commented SQL Script ,as you cannot see all the query results in the same page too as opposed to a notebook; Moreover, a notebook (with or without results) can be exported in a read-only format like html or pdf to share the info with third parties, i.e. you can automate an analysis process that include code to be shared, cool stuff.

Practical Examples

One of the straightforward usages of Notebooks for DBAs is Diagnostic: Imagine having a single file with various diagnostic queries, clicking a single button and all the queries gets executed one by one and the results saved in the same single file, which can be then analyzed off-site.

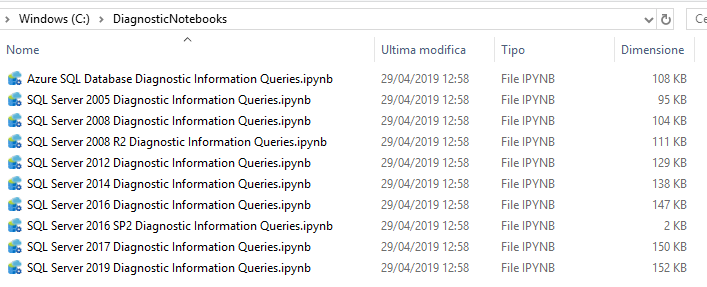

Taking as example what my fellow Italian Gianluca Sartori posted last month, I’ve built on it an automatic Powershell script that automatically downloads all the most recent Glenn Berry Diagnostic Scripts and creates the notebooks for you.

Just download this script and launch it passing the output directory parameter, like so:

& '.\Create Diagnostic Notebooks.ps1' C:\DiagnosticNotebooks\

This will create a folder containing all the diagnostic scripts currently available:

As the notebook themselves, you can run all the cells by clicking “Run Cells” in ADS, or, better yet, you can run the cell that you’re interested in by clicking the little “play” button that appears when you hover on the cell.

Powershell Nerd Details

In my approach, I’m pulling the most I can directly from Github using its APIs. In downloading the diagnostic scripts from My Repository (since Glenn doesn’t have one for these scripts) and the Invoke-DbaDiagnosticQueryScriptParser function, which is used to parse the diagnostic scripts, directly from the dbatools repository, without stashing all the code in my script:

[CmdletBinding()]

Param(

[parameter(Mandatory)]

[System.IO.FileInfo]$OutputPath

)

function DownloadFilesFromRepo {

Param(

[string]$Owner,

[string]$Repository,

[string]$Path,

[string]$DestinationPath

)

$baseUri = "https://api.github.com/"

$args = "repos/$Owner/$Repository/contents/$Path"

$wr = Invoke-WebRequest -Uri $($baseuri+$args)

$objects = $wr.Content | ConvertFrom-Json

$files = $objects | Where-Object {$_.type -eq "file"} | Select-Object -exp download_url

if (-not (Test-Path $DestinationPath)) {

# Destination path does not exist, let's create it

try {

New-Item -Path $DestinationPath -ItemType Directory -ErrorAction Stop

} catch {

throw "Could not create path '$DestinationPath'!"

}

}

foreach ($file in $files) {

$fileDestination = Join-Path $DestinationPath (Split-Path $file -Leaf) | ForEach-Object { $_ -replace '%20',' '}

try {

Invoke-WebRequest -Uri $file -OutFile $fileDestination -ErrorAction Stop -Verbose

"Grabbed '$($file)' to '$fileDestination'"

} catch {

throw "Unable to download '$($file.path)'"

}

}

}

#Download Diagnostic Scripts

DownloadFilesFromRepo -Owner "EmanueleMeazzo" -Repository "tsql.tech-Code-snippets" -Path "DMV/Glenn Berry Diagnostic Scripts" -DestinationPath "TempFolderForThisStuff"

#Download DbaTool's Invoke-DbaDiagnosticQueryScriptParser

DownloadFilesFromRepo -Owner "sqlcollaborative" -Repository "dbatools" -Path "internal/functions/Invoke-DbaDiagnosticQueryScriptParser.ps1" -DestinationPath "TempFolderForThisStuff"

#Load The Function

. .\TempFolderForThisStuff\Invoke-DbaDiagnosticQueryScriptParser.ps1

#Create the Notebooks

New-Item -ItemType Directory -Force -Path $OutputPath

$files = Get-ChildItem -Path "TempFolderForThisStuff\*.sql"

foreach ($file in $files)

{

$filename = $OutputPath.ToString() + "\" + (Split-Path $file -Leaf).Replace(".sql",".ipynb").ToString()

$Description = (Split-Path $file -Leaf).Replace(".sql","").ToString()

$cells = @()

$cells += [pscustomobject]@{cell_type = "markdown"; source = "# $Description" }

Invoke-DbaDiagnosticQueryScriptParser $file |

ForEach-Object {

$cells += [pscustomobject]@{cell_type = "markdown"; source = "## $($_.QueryName)`n`n$($_.Description)" }

$cells += [pscustomobject]@{cell_type = "code"; source = $_.Text }

}

$preamble = @"

{

"metadata": {

"kernelspec": {

"name": "SQL",

"display_name": "SQL",

"language": "sql"

},

"language_info": {

"name": "sql",

"version": ""

}

},

"nbformat_minor": 2,

"nbformat": 4,

"cells":

"@

$preamble | Out-File $filename -Force

$cells | ConvertTo-Json | Out-File -FilePath $filename -Append

"}}" | Out-File -FilePath $filename -Append

}

Remove-Item "TempFolderForThisStuff" -Force -Recurse

Takeaway

Azure Data Studio is ready to bring SQL Notebooks to the mainstream and uplift SQL Server in the Holy Data Science realm.

Many features are still missing in ADS like images support, graphs in query ouput (it’s a feature that’s enabled in standard queries, but not in notebooks), LaTeX markdown and especially copy and paste for query results (!) , but I’m sure that with time everything will be sorted out.

[…] Emanuele Meazzo takes a look at the current state of SQL Notebooks in Azure Data Studio: […]

[…] consultants another way to troubleshoot our beloved SQL Servers; I’ve already talked about diagnostic notebooks in the past, and now, since Azure Data Studio has implemented the feature, I wanted to group them […]