The Issue

If you ever tried to manage a PowerBI Tenant, you may have come across this problem:

A part the PowerBI success comes from the ability for BI people to self-serve the data it needs, you just provide them with the datasources that they need and they can go around creating all the reports that they want; this is also an issue when trying to manage the tenant, because by default the owner of the dataset is who published the report in the first place, which is usually the BI guy that created it.

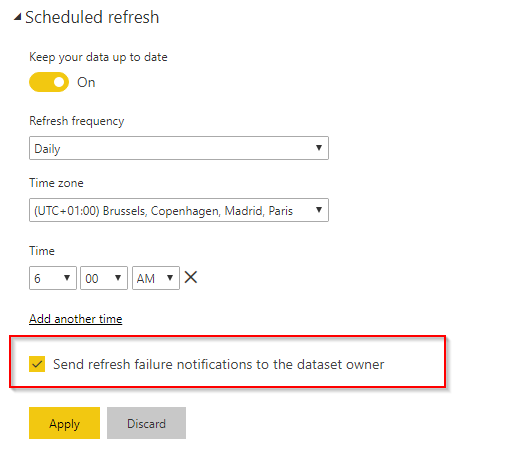

Where is the issue you ask? It’s sneaky, because if you schedule the dataset for refresh, and it fails for some reason, only the Dataset owner can get notified. No PowerBi admin, no Workspace Admins, only the dataset owner.

There is no way to configure a global alert or to alert someone else, if someone else wants to get the alerts it must take ownership of the dataset, which beats the purpose of a self-service BI tool and it’s definitely not a fun experience when there are hundreds of reports in your organization.

The Solution

Using the PowerBI PowerShell Cmdlets and the PowerBI REST Api we can interact with our tenant, get all the information we need and act on those.

- Log into the PowerBI service

- Get all the Workspaces in the Organization

- For each Workspace, get all the Datasets

- For each Dataset, if refreshable, fetch the available Refresh History using PowerBI REST APIs

- Parse the JSON results returned from the api formatting it in a nice tuple

- The data is ready to be acted on!

Proof of Concept

In this POC, I’ll write the results into a CSV file to be fetched back into PowerBI to create a report, this is to allow anyone to replicate the same POC; a decent approach for a final product would be to write the data into a Database, in order to keep the history somewhere too, as Microsoft doesn’t keep a complete history and the REST APIs return just about everything that’s available.

$myCred = Get-Credential

Connect-PowerBIServiceAccount -Credential $myCred

$Workspaces = Get-PowerBIWorkspace

$ExportFile = 'C:\Users\emeazzo\Desktop\Scripts\PowerBI\PowerShell\RefreshHistory\PowerBiRefreshHistory.csv'

Remove-Item $ExportFile -Force -ErrorAction SilentlyContinue

foreach($workspace in $Workspaces)

{

$DataSets = Get-PowerBIDataset -WorkspaceId $workspace.Id | where {$_.isRefreshable -eq $true}

foreach($dataset in $DataSets)

{

$URI = "groups/" + $workspace.id + "/datasets/" + $dataset.id + "/refreshes"

#$OutFile = $ExportFolder + '\' + $workspace.Name + '-' + $dataset.Name + '.json'

$Results = Invoke-PowerBIRestMethod -Url $URI -Method Get | ConvertFrom-Json

foreach($result in $Results.value)

{

$errorDetails = $result.serviceExceptionJson | ConvertFrom-Json -ErrorAction SilentlyContinue

$row = New-Object psobject

$row | Add-Member -Name "Workspace" -Value $workspace.Name -MemberType NoteProperty

$row | Add-Member -Name "Dataset" -Value $dataset.Name -MemberType NoteProperty

$row | Add-Member -Name "refreshType" -Value $result.refreshType -MemberType NoteProperty

$row | Add-Member -Name "startTime" -Value $result.startTime -MemberType NoteProperty

$row | Add-Member -Name "endTime" -Value $result.endTime -MemberType NoteProperty

$row | Add-Member -Name "status" -Value $result.status -MemberType NoteProperty

$row | Add-Member -Name "errorCode" -Value $errorDetails.errorCode -MemberType NoteProperty

$row | Add-Member -Name "errorDescription" -Value $errorDetails.errorDescription -MemberType NoteProperty

$row | Export-Csv -Path $ExportFile -Append -Delimiter ';' -NoTypeInformation

}

}

}

I’m using the following cmdlets:

- Connect-PowerBIServiceAccount to actually connect to the service in the first place

- Get-PowerBIWorkspace in order to get the list of the workspaces that my user has access to

- Get-PowerBIDataset in order to get the list of the datasets that are in a specific Workspace

As far as the REST APIs goes, I used Get Refresh History in order to get the refresh history of the specific dataset that I was fetching, given that I’m exploring iteratively each Dataset in each Workspace the rest URI builds itself.

The report

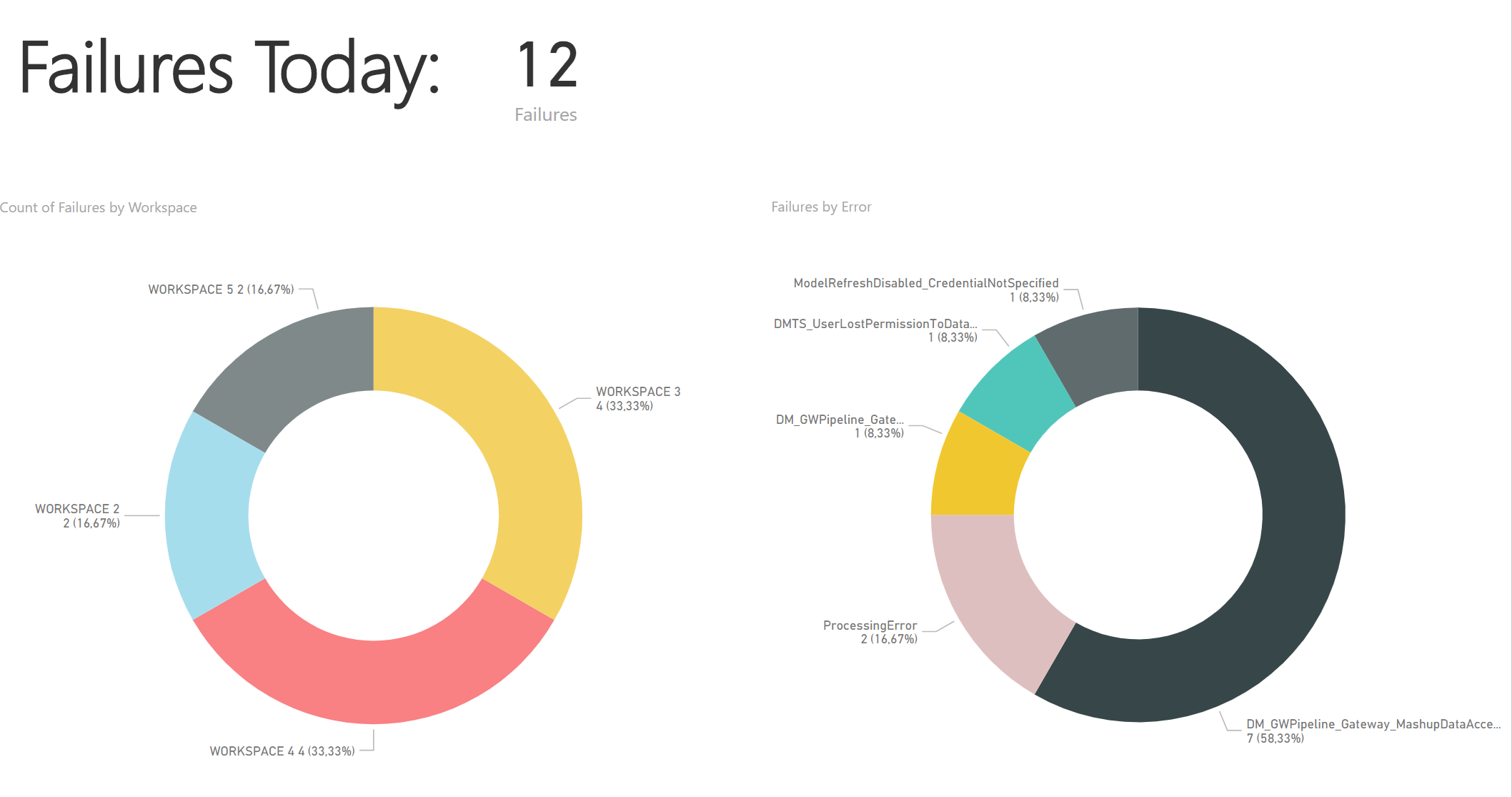

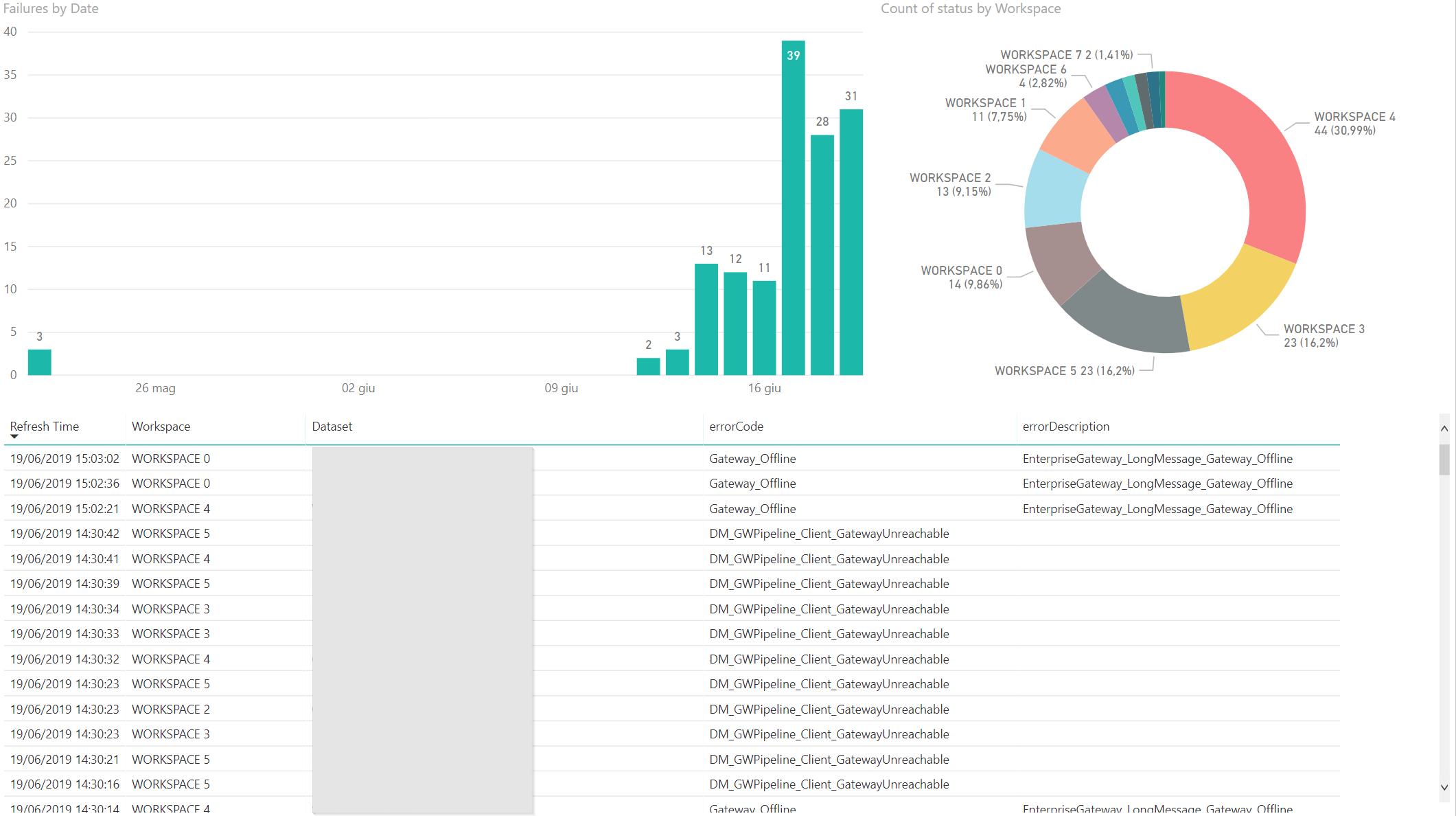

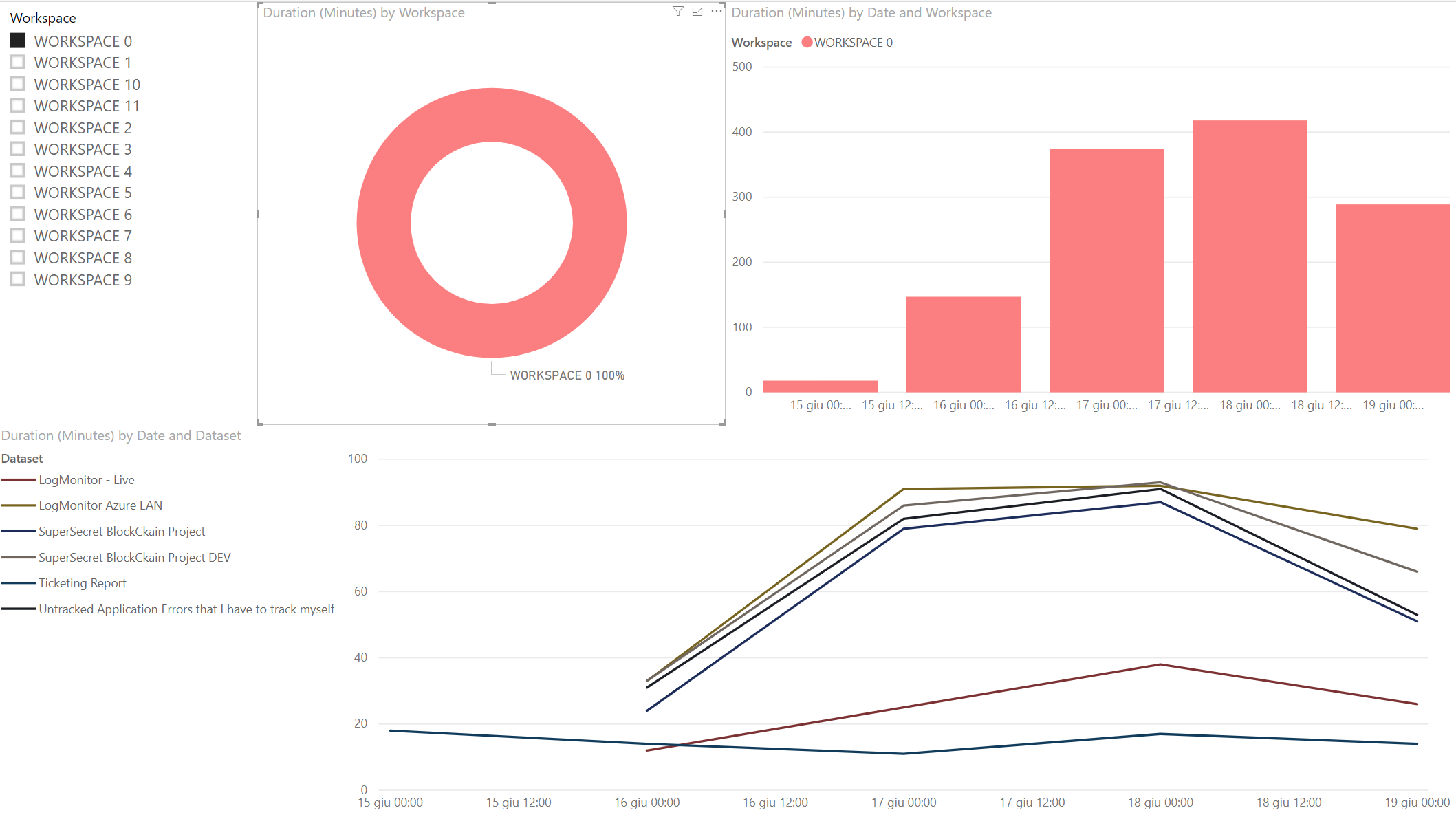

Using this data, I’ve created a PowerBI report that not only shows the refresh failures, but since we were at it we can show the duration of each refresh, in order to spot any unusual trend or strain on the gateway:

In the first page, you’ll find the alerts for today, I expect this to be zero in a good day, which this definitely wasn’t. Errors are sorted by Workspace and Error type

In the second page, you’ll find a deeper overview of the errors over the days, bucketed by day, workspace, number and type of errors in order to understand what’s going on.

The third page is releated to the performance of the single workspace/dataset, using the timestamps in the refresh data we’re able to tell if there is any relevant deviation from the norm and what’s the total time that we’re passing each day refreshing datasets.

You can download the report template from my github here.

The Alerts

There is the fast and easy way, using PowerBi’s Dashboard alerts feature, and there is the more powerful and flexible way that you’re not going to see (but you can still ask me if curious); let’s go with the fast way:

Once that the report has been published in a workspace of your choice, you have to pin the “today’s failures” indicator to a dashboard; in order to do so, just open the report, hover to the visual with the number of failures and click “Pin Visual”, like so:

As you see, it will ask you in which Dashboard to pin it, whatever suits you it’s ok, even a newly created one.

Now, let’s get to that dashboard with the pinned visual, and set up an alert:

At this point you’re probably thinking: “Wait a second, we’ve solved nothing, we’re getting an email if the failure number is greater than 0 in any of the monitored workspaces, but it’s still only one person that gets the email” , and you’ll be right.

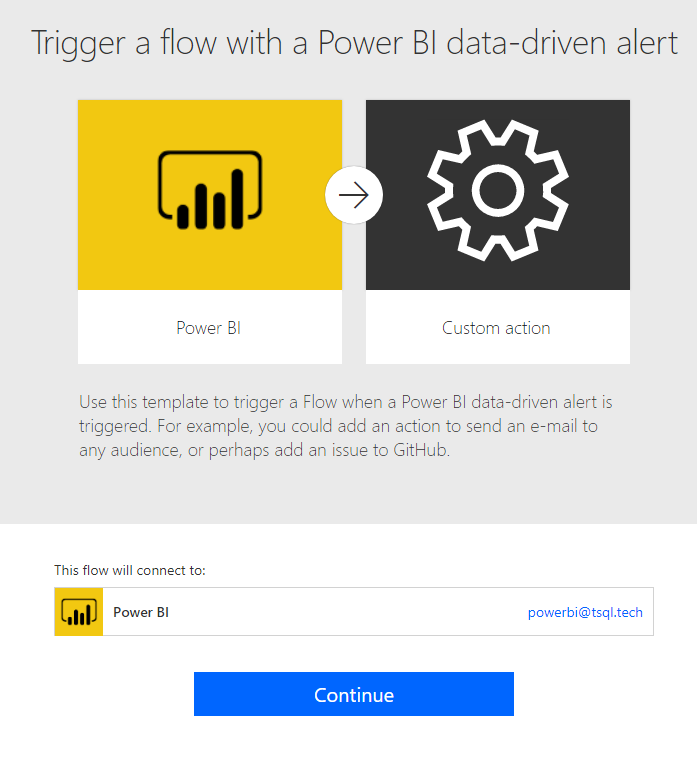

But that’s where the power of Flow comes to the rescue; did you notice the little link in the bottom part of the alerts window? The one that says “Use Microsoft Flow to trigger additional actions” ? Go Ahead, click it.

Boom, the alert it’s a trigger in Microsoft Flow, which means that it can trigger whatever chain of events you want, from simply sending an email to a distribution list, to logging the event, to even send a REST request to PowerBi to try again the refresh if certain conditions are met. The only limit is your fantasy (and the licensing model that your corporation have, really).

Wrap Up

Everything would be way easier if Microsoft simply allowed/enabled admin/general failure notifications for PowerBi Dataset Refreshes; until then, this is a rather linear approach and offers some additional insights on your datasets too. You’re welcome.

Hello,

Even though I am power bi service admin. I am not able to retrieve all datasets refresh history . Only seeing few datasets refresh history . Am i missing something

Thanks

Hi!

You’re not seeing only certain datasets in a workspace or you’re missing whole workspaces?

In the latter case, it’s possibile that they are old Office365 workspaces related to groups that you are not allowed to access? Are you a O365 admin too?

Hello Emanuele,

great post.

You mentioned: “there is the more powerful and flexible way that you’re not going to see (but you can still ask me if curious)”

Now I am curious, how is the more powerful alert solution working?

Thanks!

Hello Torsten,

glad you asked! What I had in mind is not really a breakthrough, to to simply create a Powershell script for alerting too; in that way you could personalize messages, details, recipients etc…

However, the good news is that not long after posting this article Microsoft added the possibility to add whoever you want to the refresh failures (https://powerbi.microsoft.com/en-us/blog/sending-refresh-notifications-to-others/)

Still, in the MS solution you cannot add to the mailing list people from outside your organization without adding a guest account for them too; only in this niche case a custom alert is still relevant imho

Great script, thanks!